Ultimate Guide to ChatGPT 101: What is it, How does it work, and How to Increase Productivity with It (before it takes over our lives) - Part 1

The arrival of ChatGPT in 2022 marked a historic moment as humanity relinquishes sovereignty to AI for efficiency and productivity. In this post, we explore what ChatGPT is and the mechanics of ChatGPT.

Hi All,

In 17th-century Europe, people relinquished individual sovereignty to ‘governments’ in the form of ‘social contracts’ (Think Leviathan!) in exchange for protection and safety.

Now, humanity is about to relinquish its sovereignty to AI in exchange for efficiency and productivity. This is a moment in history. With the arrival of ChatGPT on Nov 30, 2022, the world as we have known it came to an end.

Some say this is equivalent to the invention of the wheel or even fire! Yet its ramifications are far-reaching and unknown; we have to navigate carefully.

The characteristics of AI - including its capacities to learn, evolve, and surprise - will disrupt and transform them all. The outcome will be the alteration of human identity and the human experience of reality at levels not experienced since the dawn of the modern age. - excerpt from 'The Age of AI: And Our Human Future' by Henry Kissinger, Eric Schmidt, Daniel Huttenlocher

Conspiracy theorists would say we were forewarned by 'The Age of AI: And Our Human Future' (in the book, AI is called 'synthetic humans') and 'The Fourth Industrial Revolution.'

Look how spot-on Arthur C. Clarke was on the future of AI!

Prescient https://t.co/Sw9fjbLnJR

— Elon Musk (@elonmusk) March 27, 2023

Side note - Déjà vu: I also wrote a couple of posts back in 2021 on AI for my newsletter and fast forward, I am amazed by what we (the general public) are presented with today. How come we didn't know about this?! I will post that again here and would love to get your thoughts on AI and ChatGPT! There are ongoing debates re: ethics, copyrights, data privacy, national security issues and the future of humanity.

What's covered in this two-part post:

- What is ChatGPT?

- First things first, what versions are currently available?

- ChatGPT Mechanics - How does it work?

- Concept cheatsheets - I asked ChatGPT to help me answer

- Hallucination

- ChatGPT training mechanism - pros and cons (will be covered in part 2)

- What's next for ChatGPT (will be covered in part 2)

- ChatGPT Goldrush - How can I use it to increase my productivity? (will be covered in part 2)

- Other recommended resources (will be covered in part 2)

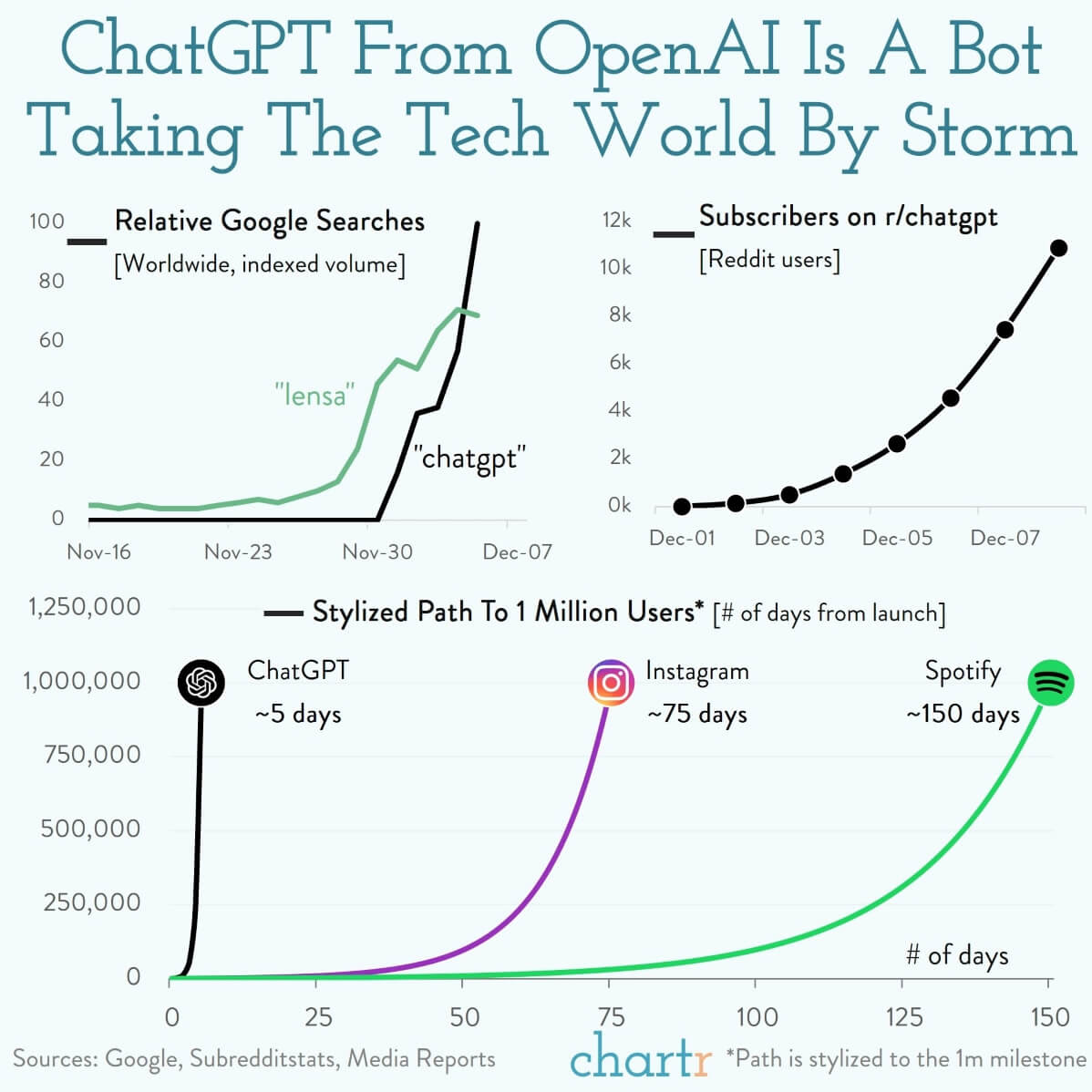

Indeed, the two charts below show how sensational ChatGPT's arrival has been.

I. What is ChatGPT?

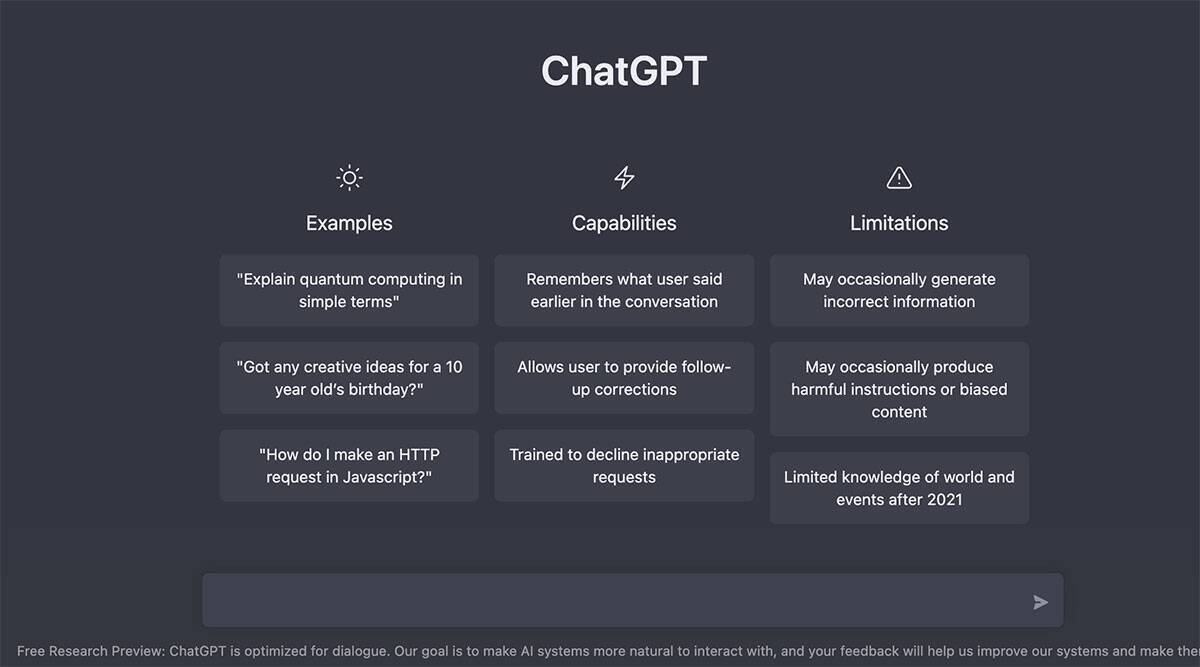

ChatGPT is an advanced artificial intelligence (AI) language model that uses deep learning techniques to understand and generate human-like responses to natural language inputs. It is part of the GPT (Generative Pre-trained Transformer) family of models, which have been developed by OpenAI.

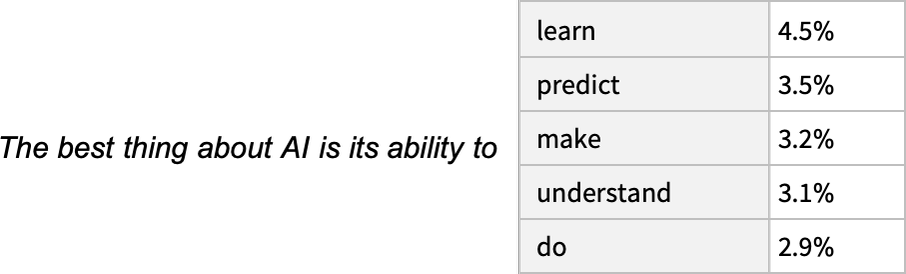

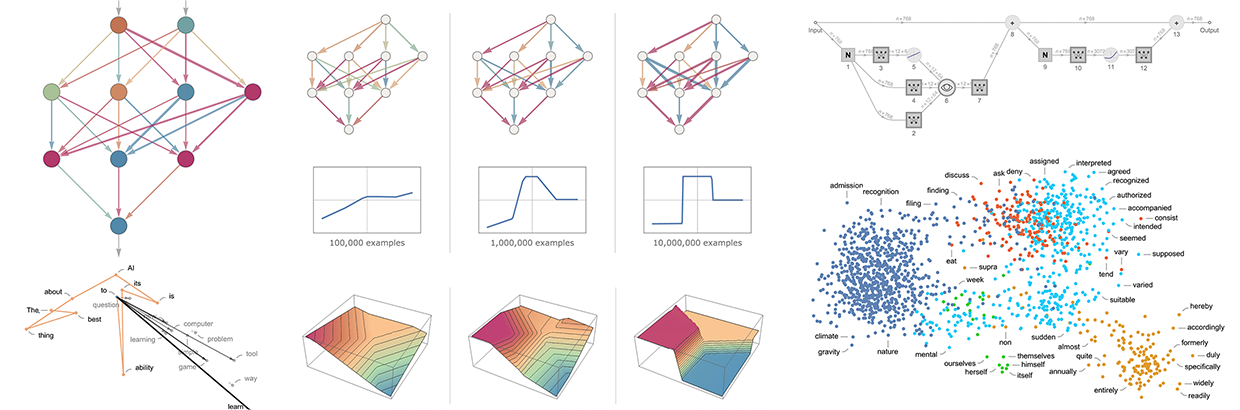

In layman's terms, it can be described as an advanced auto-complete based on probabilities. Similar to the drop-down suggestions that pop up when we type a keyword into Google, ChatGPT works out what to say next, one word at a time, using a probabilistic model: it picks a likely next word given the words so far, based on patterns it recognized by studying lots of text.

So in a way, ChatGPT is like a talking robot isolated in a room that you can ask questions to, just like how you might ask a question to another person. But instead of talking to a real person, you're talking to a computer program that has been trained to understand and respond to your questions in a helpful way.
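To make the auto-complete analogy concrete, here is a minimal sketch of a next-word predictor that only counts which word tends to follow which. The corpus and the function names (`train_bigrams`, `next_word_probs`, `autocomplete`) are made up for illustration. ChatGPT itself uses a large neural network rather than a count table, so treat this as a toy version of the idea, not how ChatGPT is built.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; a real model studies vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."


def train_bigrams(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn the raw follow-up counts for `prev` into probabilities."""
    total = sum(counts[prev].values())
    return {word: n / total for word, n in counts[prev].items()}


def autocomplete(counts, start, length=4):
    """Repeatedly append the most likely next word (greedy decoding)."""
    words = [start]
    for _ in range(length):
        followers = counts[words[-1]]
        if not followers:
            break  # no known continuation for this word
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


counts = train_bigrams(corpus)
print(next_word_probs(counts, "the"))  # "cat" is the most probable follow-up
print(autocomplete(counts, "dog"))
```

Starting from "dog", the sketch keeps choosing the most frequent follow-up word it has seen, which is the "pattern recognition" part of the analogy in miniature.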

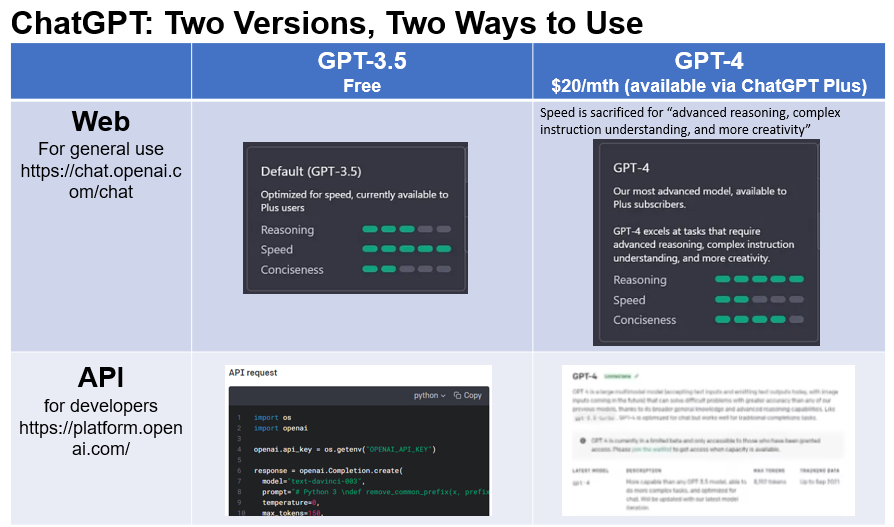

II. First things first, what versions are currently available?

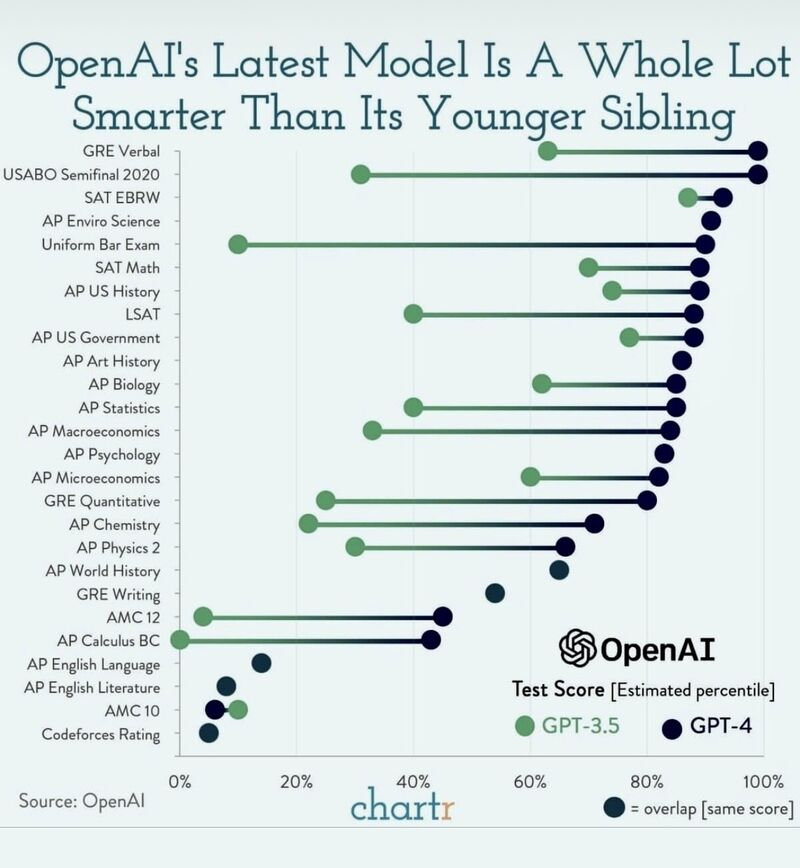

There are two versions available at the moment: GPT-3.5 (free) and GPT-4 ($20/month, available in the paid version, 'ChatGPT Plus'). The GPT-4 API for developers is available via a waitlist. You can also use Microsoft's Bing to access GPT-4 for free.

You can check out OpenAI's whitepaper and website for the 'official' comparison.

III. ChatGPT Mechanics - How does it work?

Whether GPT-3.5 or GPT-4, at the end of the day, these are what are called 'large language models*'.

GPT = A model trained on a large language data set

ChatGPT = A GPT model that has been further trained to converse with humans.

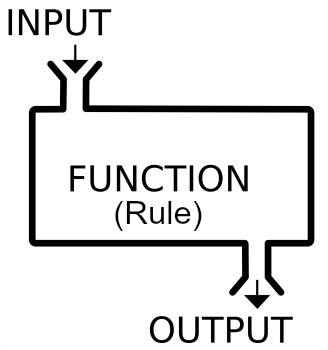

In a way, you can think of a 'large language model' as a function, which is a term used in math and computer science to describe a process that takes in some input and produces an output.

In the case of ChatGPT, the input might be a prompt or a question, and the output would be a block of text that attempts to answer the prompt or continue the conversation in a coherent and sensible way. One caveat to the analogy: what the model computes is a set of probabilities for the next word, and ChatGPT usually picks from them with some randomness. So unlike a function in math, it produces the same output for a given input only when that randomness is switched off or fixed; otherwise the same prompt can yield different answers.
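One way to see what "the same conditions" means in practice is to look at how a next word might be chosen from a model's scores. The sketch below is an illustration under assumptions: the scores in `logits` are invented, and `softmax`, `greedy`, and `sample` are hypothetical helper names, not part of any OpenAI API.

```python
import math
import random

# Hypothetical scores ("logits") a model might assign to candidate next words.
logits = {"Paris": 4.0, "London": 2.0, "Rome": 1.0}


def softmax(scores, temperature=1.0):
    """Convert scores into probabilities; a lower temperature sharpens them."""
    scaled = {w: s / temperature for w, s in scores.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - peak) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def greedy(scores):
    """Always pick the highest-scoring word: same input, same output."""
    return max(scores, key=scores.get)


def sample(scores, temperature, rng):
    """Draw a word at random in proportion to its probability."""
    probs = softmax(scores, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


print(greedy(logits))  # deterministic choice
rng = random.Random(0)  # fixing the seed makes the random draws repeatable
print([sample(logits, 1.5, rng) for _ in range(8)])
```

With `greedy`, the function analogy holds exactly. With `sample`, the output is repeatable only if the random seed is also held fixed, which is one reasonable reading of "the same conditions".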

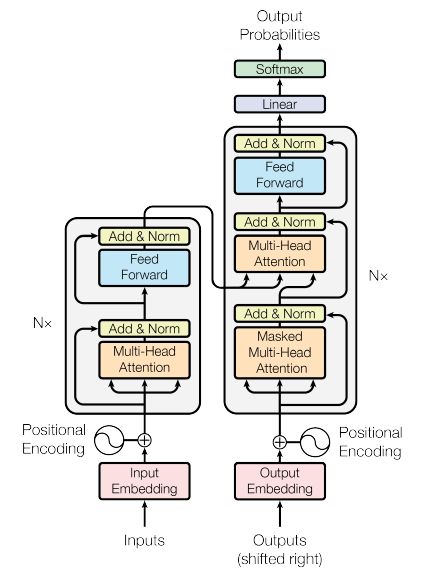

And, GPT stands for Generative Pre-trained Transformer.

- Generative (AI): the ability of the model to generate new text or language based on its training data and the input provided by the user rather than simply identifying or classifying existing text. This ability to generate new text makes GPT models highly versatile and useful for a wide range of natural language processing tasks, such as language translation, summarization, and question-answering.

- Pre-trained**: GPT models are initially trained on a large dataset of text inputs before being fine-tuned for specific tasks. The pre-training process is designed to give the model a broad understanding of language and context, which allows it to generate high-quality text outputs even when faced with new or unfamiliar inputs.

- Transformer: The Transformer originated at Google. It is a type of neural network architecture that Google researchers developed to process and generate natural language. It is designed to process sequential data, such as text, and is particularly well-suited to modeling long-range dependencies and contextual relationships within text inputs. The transformer architecture was introduced in a Google paper by Vaswani et al. (2017) and has since become a popular choice for many natural language processing (NLP) tasks, including language translation, question-answering, and text generation.

What sets ChatGPT apart from other chatbots and virtual assistants is its ability to generate responses that are contextually relevant and linguistically sophisticated. It achieves this by using a combination of 'pre-training' and 'fine-tuning' processes, as well as advanced 'machine learning' techniques such as transfer learning and attention mechanisms.

In summary, GPT stands for Generative Pre-trained Transformer, and refers to a family of language models developed by OpenAI. These models are capable of generating new text outputs, are initially trained on a large dataset of text inputs before being fine-tuned for specific tasks, and use the Transformer neural network architecture to process long sequences of text inputs more efficiently.

IV. Concept cheatsheets - I asked ChatGPT to help me answer

Okay, you probably have loads of questions after reading this. Let's break them down one by one.

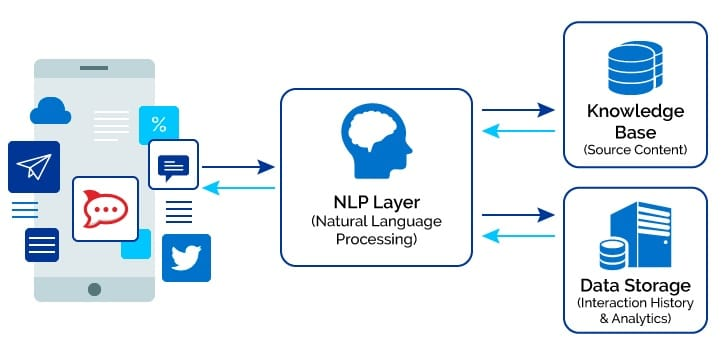

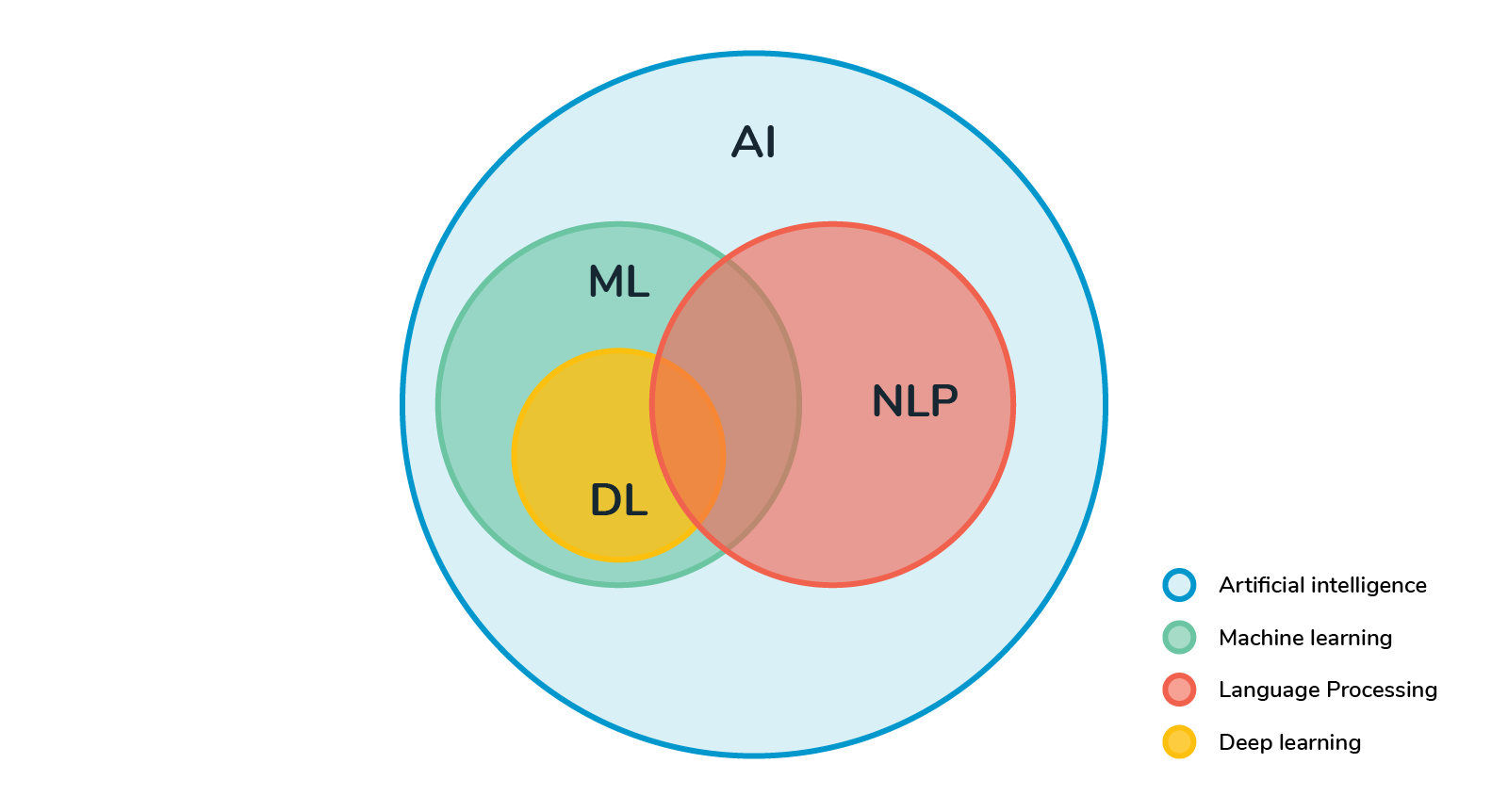

1) What is Natural Language Processing (NLP)?

A: Natural language processing (NLP) is a field of computer science that enables computers to understand and generate human language. It involves using artificial intelligence (AI) and machine learning (ML) techniques to process and analyze text, speech, and other forms of natural language input.

NLP is used in many applications that involve language processing, such as chatbots, virtual assistants, and language translation tools. These applications use NLP to understand human input and generate relevant responses.

For example, if you ask a chatbot a question, the chatbot will use NLP to analyze the text of your question and generate an appropriate response based on its understanding of the language. Similarly, if you use a language translation tool, the tool will use NLP to analyze the text of the input language and generate an accurate translation in the desired output language.

Overall, NLP is a powerful tool that allows computers to interact with humans in a more natural and intuitive way, making it a key area of research in artificial intelligence and computer science.

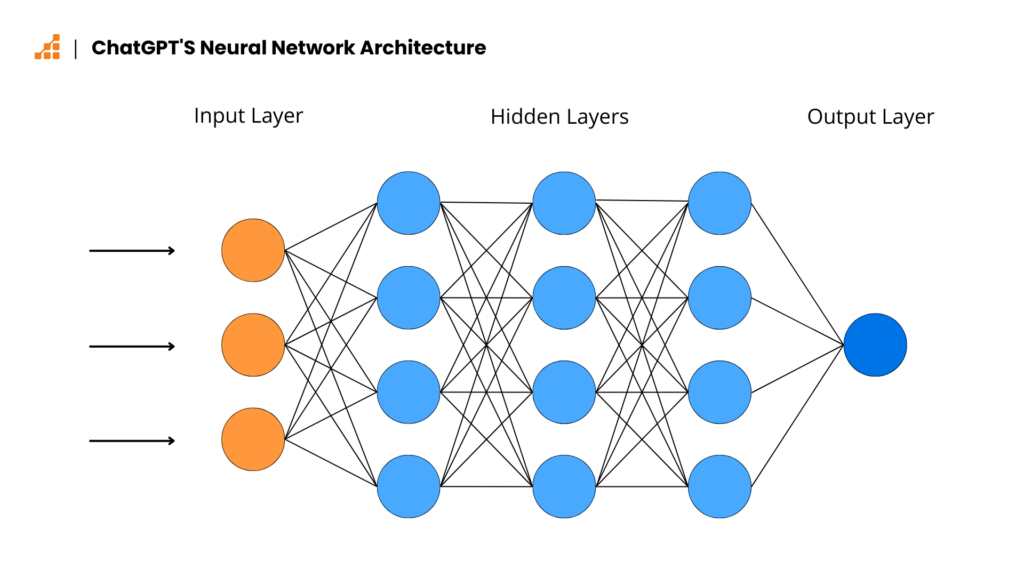

2) What is a Neural Network?

A: When we talk about a "neural network," we're referring to a type of computer system that's modeled after the human brain. It can learn and make predictions based on data it's been trained on. The structure or design of a neural network is called its "neural network architecture." Similar to how the human brain has different regions for different functions, a neural network has layers of interconnected nodes that process and transform input data to output predictions. The architecture determines factors like the number of layers, nodes in each layer, and connections between them, all of which can affect the network's ability to learn and make accurate predictions.

Different neural network architectures are better suited for different types of tasks. For instance, a convolutional neural network (CNN) is often used for image recognition because it excels at identifying patterns in visual data. Conversely, a recurrent neural network (RNN) is better suited for sequential data, like text or speech, because it can remember information from previous inputs.

To summarize, a neural network architecture serves as the blueprint for how a neural network is structured and influences how effectively it can learn and make predictions from data. If you want to learn more, check out my other blog post on the topic.
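As a rough illustration of "layers of interconnected nodes," here is a minimal sketch of a network with 2 inputs, 2 hidden nodes, and 1 output node. The weights are hand-picked (an assumption made purely for the demo; in a real network they are learned from data) so that the network outputs 1 only when exactly one of its two inputs is 1.

```python
import math


def sigmoid(x):
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One layer: each node takes a weighted sum of all inputs, then squashes it."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


# Architecture: 2 inputs -> 2 hidden nodes -> 1 output node.
# Weights are hand-picked for illustration; training would normally set them.
hidden_weights = [[20.0, 20.0], [-20.0, -20.0]]
hidden_biases = [-10.0, 30.0]
output_weights = [[20.0, 20.0]]
output_biases = [-30.0]


def predict(a, b):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer([a, b], hidden_weights, hidden_biases)
    return layer(hidden, output_weights, output_biases)[0]


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, round(predict(a, b)))
```

Changing the architecture here would mean changing the shapes of the weight lists: more rows add nodes to a layer, and more calls to `layer` add depth.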


ChatGPT uses a neural network architecture from Google called the 'Transformer'. Google researchers introduced it in the 2017 research paper "Attention Is All You Need," which proposed a new neural network architecture for language modeling that uses attention mechanisms to process long sequences of text inputs more efficiently.
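For the curious, the core attention calculation from that paper, softmax(QK^T / sqrt(d_k)) V, can be sketched in a few lines of plain Python. The three token vectors below are made up, and the sketch feeds them in directly as queries, keys, and values; a real Transformer first passes them through learned projections and runs many such "heads" in parallel, which is left out here.

```python
import math


def softmax(xs):
    """Turn a list of scores into weights that sum to 1."""
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three made-up 2-dimensional token vectors, used as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one token "pays attention" to every token in the sequence, which is how the architecture relates words that sit far apart in the text.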

3) What are pre-training and fine-tuning?

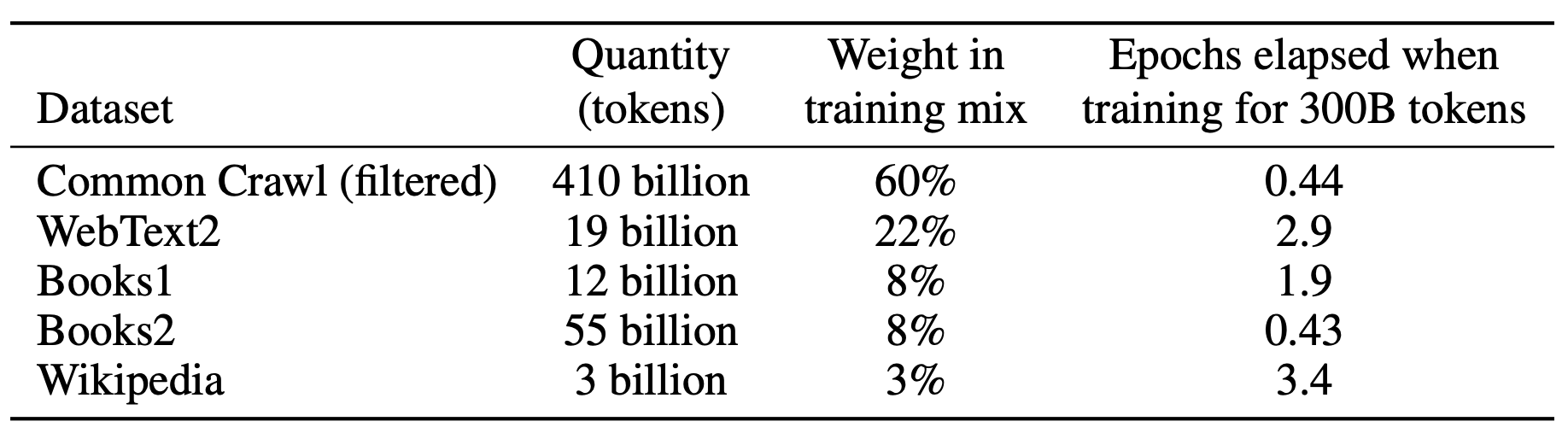

A: In the case of GPT models, the pre-training process involves training the model on a massive dataset of text inputs, often hundreds of gigabytes or more in size. The datasets used for pre-training GPT models typically consist of a wide range of text sources, such as books, news articles, and web pages, and are designed to provide the model with a broad understanding of language and context.

The datasets used for pre-training GPT models are often selected to be representative of the types of text inputs the model is likely to encounter in its intended applications, such as social media posts, customer service chats, or news articles. These datasets can also be filtered to remove certain types of content, such as profanity or sensitive topics, to ensure that the model produces appropriate outputs. Fine-tuning is the second stage: the pre-trained model is trained further on a smaller, task-specific dataset so that it performs a particular job well. For ChatGPT, that job is holding a conversation.
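The two-stage idea of pre-training followed by fine-tuning can be shown with a deliberately tiny stand-in: a one-number model, y = weight * x, trained by gradient descent. Everything here is an assumption for illustration (the data, the `train` helper, the learning rate). GPT models have billions of weights and learn from text, but the pattern of "train broadly first, then continue training briefly on a small task-specific dataset" is the same.

```python
def train(weight, data, steps, learning_rate=0.01):
    """Gradient descent on mean squared error for the model y = weight * x."""
    for _ in range(steps):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= learning_rate * grad
    return weight


# "Pre-training": plenty of general data that follows y = 2x.
general_data = [(x, 2.0 * x) for x in range(1, 11)]
# "Fine-tuning": a small task-specific dataset that follows y = 2.5x.
task_data = [(1, 2.5), (2, 5.0), (3, 7.5)]

pretrained = train(0.0, general_data, steps=200)
fine_tuned = train(pretrained, task_data, steps=5)  # starts from pre-trained weight
from_scratch = train(0.0, task_data, steps=5)       # same budget, no pre-training

print(round(pretrained, 3), round(fine_tuned, 3), round(from_scratch, 3))
```

With the same five training steps on the task data, the fine-tuned model ends up much closer to the target of 2.5 than the one trained from scratch, because it starts from what pre-training already learned.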

4) What is machine learning?

A: Machine learning is an approach to computer programming that allows machines to learn and improve from experience, without being explicitly programmed to do so. This means that machines can learn from data and adjust their behavior accordingly, without a human writing the rules by hand.

To do this, machine learning algorithms are fed large amounts of data, which they use to learn patterns and make predictions or decisions based on that data. Over time, the machine's performance improves as it continues to learn from new data.

In practical terms, machine learning is used in many applications that you may already be familiar with, such as voice assistants like Siri or Alexa, personalized product recommendations on websites like Amazon, and fraud detection in financial transactions.
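To show what "learning from data" rather than being explicitly programmed can look like, here is a minimal sketch in the spirit of the fraud-detection example. The transactions are made up and `learn_threshold` is a hypothetical helper: instead of a human hard-coding a dollar cutoff, the program picks the cutoff that makes the fewest mistakes on labelled past examples. Real fraud systems use far richer data and models.

```python
def learn_threshold(examples):
    """Pick the amount cutoff that misclassifies the fewest labelled examples."""
    candidates = sorted(amount for amount, _ in examples)
    best_cutoff, best_errors = None, len(examples) + 1
    for cutoff in candidates:
        # Rule being tested: flag a transaction as fraud if amount >= cutoff.
        errors = sum((amount >= cutoff) != is_fraud for amount, is_fraud in examples)
        if errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff


def predict(cutoff, amount):
    """Apply the learned rule to a new transaction."""
    return amount >= cutoff


# Made-up labelled transactions: (amount in dollars, was it fraud?)
history = [
    (12, False), (40, False), (75, False), (900, True),
    (1500, True), (60, False), (1100, True),
]

cutoff = learn_threshold(history)
print(cutoff, predict(cutoff, 1000), predict(cutoff, 50))
```

Feeding in more labelled history would let the learned cutoff shift on its own, which is the "improves as it continues to learn from new data" part.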

V. Hallucination

As I mentioned earlier, ChatGPT can be a great liar. Sometimes it will generate text that is semantically or syntactically plausible but in fact incorrect or nonsensical. In short, you can't trust what the machine is telling you, so 'fact checking' is extremely important!
